For education apps, summer is an important time for growth due to increased free time, the need for remedial learning, the desire for enrichment, test preparation requirements, the flexibility of learning, and marketing opportunities.

By catering to these factors, education apps can experience significant growth during the summer period.

* To view more of our case studies on education app growth: Education App Case Study - Maximizing Organic Downloads through Effective Keyword Research

Conversion rate optimization and keyword optimization are the two primary components of a Google Play ASO (App Store Optimization) strategy. Today we will mainly discuss how to design and plan A/B tests for education apps using Google Play Store Listing Experiments. These experiments involve both conversion rate optimization and keyword optimization. The aim is to give visitors the best and most convincing experience on your app store listing page, leading to more downloads and a higher conversion rate.

Through default image experiments, you can test the store listing images of your app's default language version. The experiment can include variants of the app's icon, featured graphic, screenshots, and promotional videos.

* Related insights: How to Design a Powerful App Icon to Grab Customers' Attention?

If your app's store listing has only one language version, the default image experiment will be shown to all users.

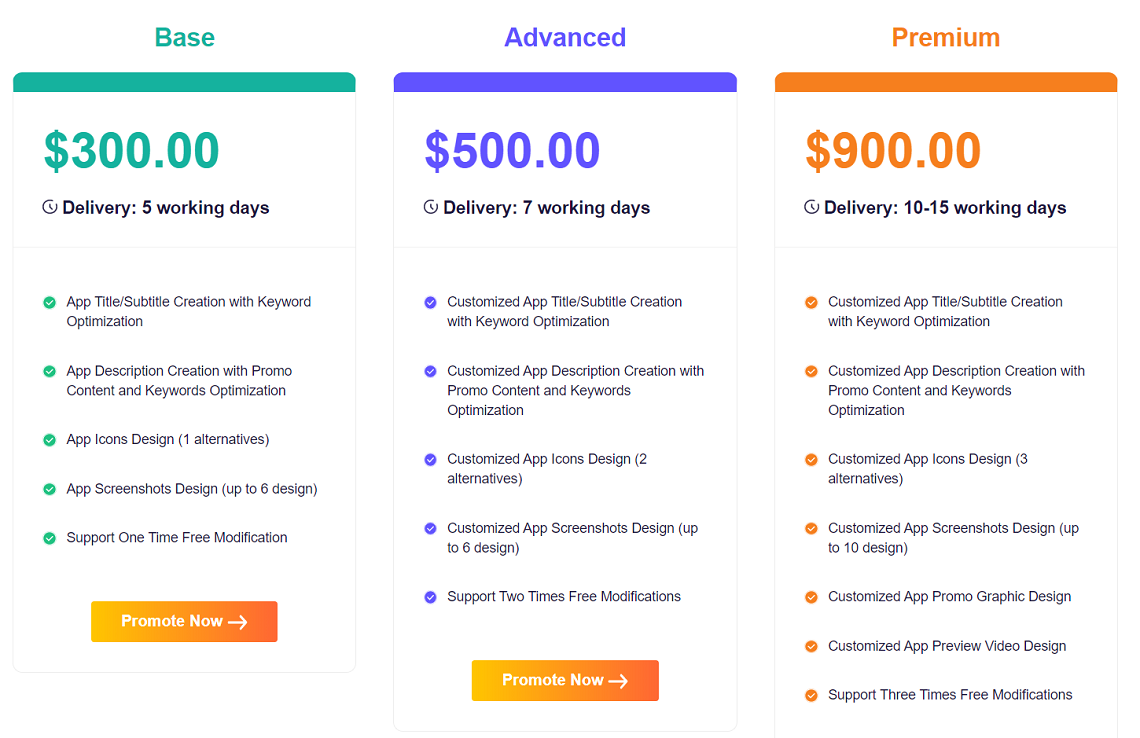


If you have added localized image resources for a specific language version, users viewing the app through that language version will be excluded from the default image experiment. For example, if the default language of your app is English, and you have a localized featured graphic specifically for French, the users viewing the app through the French language version will be excluded from the experiment (even for testing the app icon).
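The exclusion rule above can be expressed as a small check. This is only an illustrative sketch of the rule as described in this article, not Google Play's actual logic; the function name `sees_default_experiment` and its parameters are hypothetical.

```python
def sees_default_experiment(
    viewer_language: str,
    default_language: str,
    localized_asset_languages: set[str],
) -> bool:
    """Return True if a viewer is eligible for the default image experiment.

    Assumption: a viewer is excluded as soon as their language version has
    any localized image asset, as the article describes.
    """
    if viewer_language == default_language:
        # Viewers of the default language version always see the experiment.
        return True
    # Any localized image for the viewer's language excludes them,
    # even when the experiment only tests the app icon.
    return viewer_language not in localized_asset_languages


# Example from the text: English default, localized featured graphic for French.
print(sees_default_experiment("fr-FR", "en-US", {"fr-FR"}))  # French viewer
print(sees_default_experiment("de-DE", "en-US", {"fr-FR"}))  # German viewer
```

In this sketch the French viewer is excluded, while the German viewer falls back to the default listing and is therefore included.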

The impact of a store listing experiment can be measured using metrics such as first-time installers or first-time installers who are retained for at least one day. These metrics are reported hourly, and you can choose to receive an email notification when the experiment is completed.

As a reminder, ASOWorld offers a professional optimization service for visual elements. The screenshot below shows a sample design from the ASO service.

Through localized experiments, you can test the app's icon, featured graphics, screenshots, promotional videos, and textual information in up to five language versions. Only users viewing the app's store listing in the selected language will see the experiment variants.

* Related reading: Challenges You Might Face During App localization And How To Solve Them

If the app's store listing provides only one language version, only users viewing the app in the default language will be able to see the localized experiment.

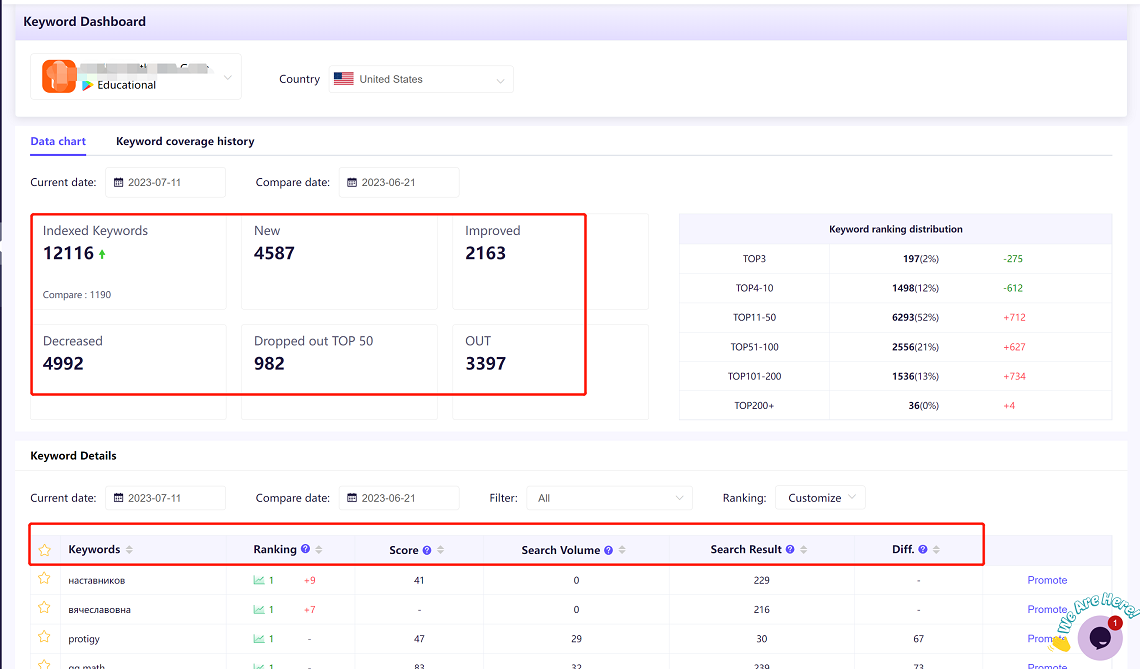

We offer free ASO tools that help developers quickly find the most valuable and promising keywords, and that provide data such as a search volume score and a difficulty score for ranking in the top 10 search results. Developers can incorporate these keywords into their A/B test strategies to improve store performance.

In addition, Google also allows you to run A/B tests for custom store listing pages. With custom store listings, you can tailor the app's listing to specifically target user segments in selected countries/regions.

* Related reading: App Marketing Strategy - How to Leverage Custom Product Pages to Increase Conversion Rates in Paid Acquisition Campaigns?

You can run up to 50 experiments simultaneously for custom store listings. It's also worth noting that you can run experiments on custom store listings while a live experiment is running on the primary store listing worldwide.

To effectively conduct A/B testing on Google Play, we summarize 12 best practices and pitfalls to avoid when running A/B tests. Start learning now!

The Minimum Detectable Effect (MDE) is another new feature that helps reduce false positives in A/B testing. Google defines MDE as "the minimum difference between a variant and the control group that needs to be observed to determine which group performs better. If the actual difference is lower than this value, your experiment will be considered inconclusive." For example, if you set the MDE to 5% and your app's original version has a conversion rate of 45%, your variant would need a conversion rate of at least 47.25% to be declared a winner (45% × 1.05 = 47.25%). Currently, you can choose an MDE between 0.5% and 6%. Smaller MDE values require larger sample sizes to detect changes. If your app is new and hasn't acquired many installations, it is recommended to set a higher MDE, since the required sample size will be smaller. If you have a more mature app with a large number of downloads, it is advisable to set a lower MDE for more accurate results.
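To make the arithmetic concrete, here is a minimal Python sketch. It treats the MDE as a relative lift over the baseline, as in the example above. The sample-size function uses a textbook two-proportion approximation to illustrate why a smaller MDE needs more visitors; it is not Google Play's own calculation, and the `alpha` and `power` defaults, like the function names, are assumptions chosen for illustration.

```python
import math
from statistics import NormalDist


def winning_threshold(baseline_rate: float, relative_mde: float) -> float:
    """Conversion rate a variant must reach, treating MDE as a relative lift."""
    return baseline_rate * (1 + relative_mde)


def approx_sample_size_per_arm(
    baseline_rate: float,
    relative_mde: float,
    alpha: float = 0.10,  # assumed two-sided significance level
    power: float = 0.80,  # assumed statistical power
) -> int:
    """Rough visitors needed per variant (standard two-proportion formula).

    Illustrative only: this is not how Google Play sizes experiments.
    """
    p1 = baseline_rate
    p2 = winning_threshold(baseline_rate, relative_mde)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# The example from the text: 45% baseline with a 5% MDE.
print(f"{winning_threshold(0.45, 0.05):.2%}")  # prints 47.25%

# Compare the approximate sample size across the selectable MDE range.
for mde in (0.005, 0.02, 0.06):
    print(mde, approx_sample_size_per_arm(0.45, mde))
```

Running the loop shows the required sample size growing quickly as the MDE shrinks, which is why a newer app with little traffic is usually better served by a higher MDE.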
